At the company where I currently work, the development process spans four environments:

- Local Environment: where developers usually build their applications

- Dev Environment: an environment for upcoming or in-progress features, where all features are deployed and tested together

- Test Environment: an environment used by non-developers, mainly sales teams and POs

- Production Environment

This process requires deploying the solution on multiple EC2 instances, which unfortunately increased our development costs, especially since we use powerful instances.

To reduce costs, the idea was simple: shut down the servers when they are not in use.

The first idea was to make the servers available only during a fixed window, for example between 9:00 AM and 6:00 PM. But I still believed there was room for optimization. For example: why should a server be available if the tester is on vacation? What if that person has other tasks to handle?

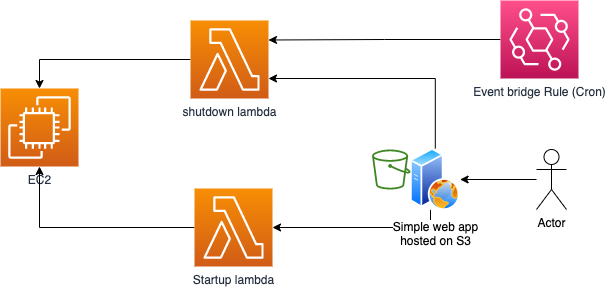

Proposed Architecture

My proposal was to have two simple APIs, one for server shutdown and one for startup. Both are triggered from a web interface. The shutdown API is also triggered by a cron job, in case an employee forgets to stop the server when they finish. Finally, the server is configured with a startup script that runs on every restart with root privileges.

Implementation

The implementation consists of the following steps:

- Creating an IAM policy and role

- Creating and configuring the Lambda functions

- Creating the Lambda client

- Configuring EventBridge

- Configuring CloudWatch logs

- Configuring the startup script

Creating IAM policy and Role

In the IAM console, create a policy with the JSON below. Replace accountID and instanceID with your own values.

This policy grants permission to start and stop specific instances and to write logs to CloudWatch.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "VisualEditor0",
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents"
                ],
                "Resource": "*"
            },
            {
                "Sid": "VisualEditor1",
                "Effect": "Allow",
                "Action": [
                    "ec2:StartInstances",
                    "ec2:StopInstances"
                ],
                "Resource": "arn:aws:ec2:*:accountID:instance/i-instanceID"
            }
        ]
    }

Next, go to Roles, select AWS service as the trusted entity and Lambda as the use case, attach the policy you just created, and create the role.
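If several instances need to be covered, the policy document above can be generated rather than edited by hand. The following is a minimal sketch, not part of the original setup: `buildPolicy` is a hypothetical helper, the `Sid` values are renamed for readability, and the account ID and instance IDs in the example call are made-up placeholders.

```javascript
// Build the IAM policy document for one or more instances.
// accountId and instanceIds are supplied by the caller.
function buildPolicy(accountId, instanceIds) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Sid: 'WriteLogs',
        Effect: 'Allow',
        Action: ['logs:CreateLogGroup', 'logs:CreateLogStream', 'logs:PutLogEvents'],
        Resource: '*',
      },
      {
        Sid: 'StartStopInstances',
        Effect: 'Allow',
        Action: ['ec2:StartInstances', 'ec2:StopInstances'],
        // One ARN per instance; the region stays a wildcard, as in the policy above.
        Resource: instanceIds.map((id) => `arn:aws:ec2:*:${accountId}:instance/${id}`),
      },
    ],
  };
}

// Placeholder values for illustration only.
console.log(JSON.stringify(buildPolicy('123456789012', ['i-0abc', 'i-0def']), null, 2));
```

The printed JSON can be pasted into the policy editor in place of the hand-written version.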

Create Lambda functions

As mentioned before, two Lambda functions will be created: one for shutdown and one for startup.

Let’s start with the shutdown function.

- Create a Lambda function

- Choose Author from scratch

- Set the runtime to Node.js (the following code was tested on 12.x)

- Assign the role you created previously

- Under advanced options, choose Enable function URL. This feature lets you proceed without an API Gateway

- Set Auth type to NONE (internal app with non-critical servers)

- Enable CORS

- Set the following as the Lambda code and deploy it:

    const AWS = require('aws-sdk');

    exports.handler = (event, context, callback) => {
        let instanceId = null;
        let instanceRegion = null;

        if (event.instanceRegion != null && event.instanceId != null) {
            // Direct invocation (for example from EventBridge): the fields are on the event itself.
            instanceId = event.instanceId;
            instanceRegion = event.instanceRegion;
        } else {
            // Function URL invocation: the fields arrive as a JSON string in the request body.
            const obj = JSON.parse(event.body);
            instanceId = obj.instanceId;
            instanceRegion = obj.instanceRegion;
        }

        const ec2 = new AWS.EC2({ region: instanceRegion });

        // Stop the instance and report the outcome to the caller.
        ec2.stopInstances({ InstanceIds: [instanceId] }).promise()
            .then(() => callback(null, `Successfully stopped ${instanceId}`))
            .catch(err => callback(err));
    };

For the startup Lambda, follow the same steps but use the following code:

    const AWS = require('aws-sdk');

    exports.handler = (event, context, callback) => {
        let instanceId = null;
        let instanceRegion = null;

        if (event.instanceRegion != null && event.instanceId != null) {
            // Direct invocation: the fields are on the event itself.
            instanceId = event.instanceId;
            instanceRegion = event.instanceRegion;
        } else {
            // Function URL invocation: the fields arrive as a JSON string in the request body.
            const obj = JSON.parse(event.body);
            instanceId = obj.instanceId;
            instanceRegion = obj.instanceRegion;
        }

        const ec2 = new AWS.EC2({ region: instanceRegion });

        // Start the instance and report the outcome to the caller.
        ec2.startInstances({ InstanceIds: [instanceId] }).promise()
            .then(() => callback(null, `Successfully started ${instanceId}`))
            .catch(err => callback(err));
    };

To test it, send the following request body (replace instanceRegion and instanceID with your own values):

    {
        "instanceRegion": "instanceRegion",
        "instanceId": "i-instanceID"
    }

Creating Lambda's client

To invoke the Lambda functions you need a client. There are many ways to build one, but to keep things simple we will use plain HTML/JS that sends a POST request. Feel free to use the boilerplate code from my GitHub. The client can be hosted on S3.
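As a rough idea of what that POST request could look like, here is a minimal sketch. It is not the boilerplate mentioned above: `buildRequest` and `callLambda` are hypothetical names, and the function URL is a placeholder to replace with the one shown in the Lambda console.

```javascript
// Placeholder: replace with the function URL of the stop (or start) Lambda.
const STOP_URL = 'https://<url-id>.lambda-url.<region>.on.aws/';

// Build the fetch() options carrying the body the Lambda handlers expect.
function buildRequest(instanceId, instanceRegion) {
  return {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ instanceRegion, instanceId }),
  };
}

// Send the request. fetchImpl is injectable so the function can be
// exercised without a network; it defaults to the global fetch.
async function callLambda(url, instanceId, instanceRegion, fetchImpl = fetch) {
  const response = await fetchImpl(url, buildRequest(instanceId, instanceRegion));
  if (!response.ok) {
    throw new Error(`Lambda call failed with HTTP ${response.status}`);
  }
  return response.text();
}
```

In a page, a button's click handler could then call `callLambda(STOP_URL, 'i-instanceID', 'instanceRegion')` and display the returned text. Because the request is sent with a JSON content type from another origin, it relies on the CORS setting enabled on the function URL.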

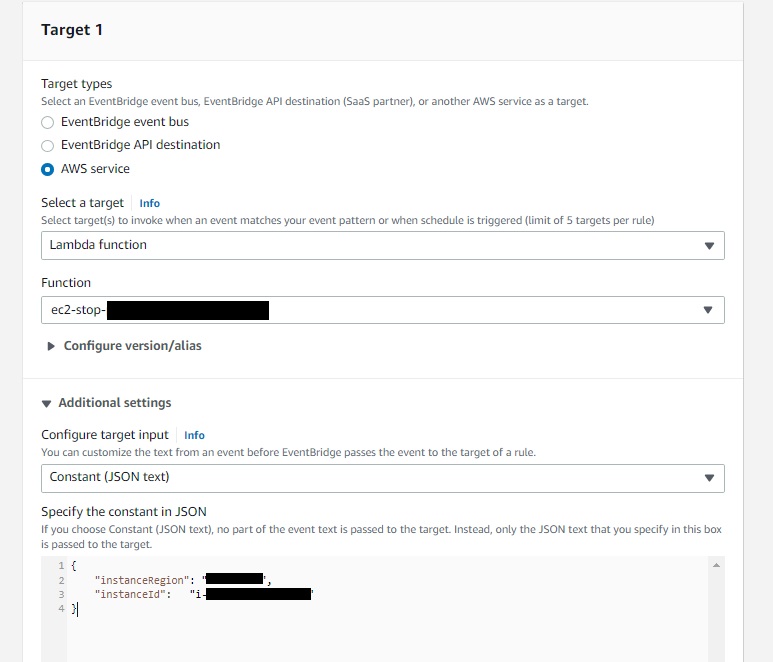

Configuring Event bridge

This step is a plan B in case an employee forgets to shut down the server after testing.

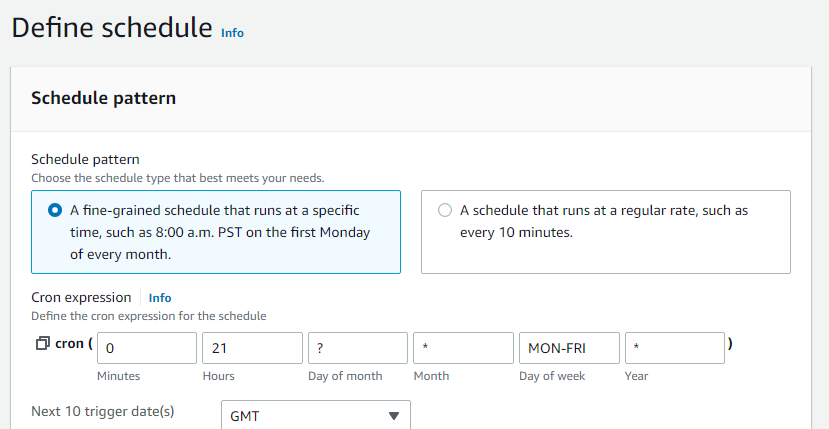

Create a new rule and configure it as follows:

For the cron pattern:
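A rule of this kind boils down to a schedule expression plus a constant JSON input for the shutdown Lambda. The sketch below builds both as plain objects shaped like the parameters of EventBridge's PutRule and PutTargets operations. The 18:00 UTC weekday schedule, the rule name, and the target ID are assumptions for illustration, not the values used in my setup, and `buildShutdownRule` is a hypothetical helper.

```javascript
// Build the parameters for a scheduled rule that stops one instance.
function buildShutdownRule(lambdaArn, instanceId, instanceRegion) {
  const rule = {
    Name: 'evening-shutdown', // hypothetical rule name
    // Six fields: minutes hours day-of-month month day-of-week year, evaluated in UTC.
    ScheduleExpression: 'cron(0 18 ? * MON-FRI *)', // assumed: 18:00 UTC, Monday to Friday
    State: 'ENABLED',
  };
  const targets = {
    Rule: rule.Name,
    Targets: [
      {
        Id: 'stop-instance', // hypothetical target ID
        Arn: lambdaArn,
        // Constant JSON input: it becomes the Lambda event, so the handler
        // finds instanceId and instanceRegion directly on the event.
        Input: JSON.stringify({ instanceRegion, instanceId }),
      },
    ],
  };
  return { rule, targets };
}
```

The same expression and JSON can be typed into the console form instead; the important part is that the constant input carries the same two fields as the request body used to test the Lambda.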

Configuring CloudWatch logs

When the Lambda functions are invoked, they generate logs. Make sure to set the retention period to a value that suits your business: CloudWatch > Logs > your log group > Retention
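The same setting can also be applied programmatically. As a hedged sketch, the helper below only builds the parameters that CloudWatch Logs' PutRetentionPolicy operation expects; `buildRetentionParams` and the function name in the example are hypothetical, and CloudWatch accepts only specific retention values, so check the allowed list before picking one.

```javascript
// Build PutRetentionPolicy parameters for a Lambda function's log group.
function buildRetentionParams(functionName, retentionInDays) {
  if (!Number.isInteger(retentionInDays) || retentionInDays < 1) {
    throw new RangeError('retentionInDays must be a positive integer');
  }
  return {
    // Lambda writes to /aws/lambda/<function name> by default.
    logGroupName: `/aws/lambda/${functionName}`,
    retentionInDays,
  };
}

// Example with a hypothetical function name and a 14-day retention.
console.log(buildRetentionParams('stop-ec2-instance', 14));
```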

Creating the startup script

You may need to run some scripts on startup in order to boot some services.

AWS provides a mechanism called EC2 user data. By default this script runs once, when the machine is created, with root privileges. It can be modified to run on every restart; if you want to go that route, refer to the AWS article.

Personally, I used systemd on the Ubuntu server and created a service.

First, create the service file in /etc/systemd/system, following the template below:

    sudo nano /etc/systemd/system/servicefile.service

With the following template:

    [Unit]
    Description=~Name of the service~

    [Service]
    WorkingDirectory=~directory of working file~
    ExecStart=~directory~/filename.sh

    [Install]
    WantedBy=multi-user.target

Start the service:

    sudo systemctl start servicefile.service

Enable it on startup:

    sudo systemctl enable servicefile.service

Cost Assessment

The beauty here is that all the components used are serverless. The cost of this integration won’t exceed $1, a very small price to pay compared to the bill for unused servers.
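To get a feel for the order of magnitude, running hours can be compared across strategies. This is only back-of-the-envelope arithmetic: it assumes the 9:00 AM to 6:00 PM window applies on weekdays only, and the 20 hours of on-demand use per week is a made-up figure, not a measurement.

```javascript
// Percentage of running hours saved compared to a baseline.
function savingsPercent(baselineHours, actualHours) {
  return Math.round((1 - actualHours / baselineHours) * 100);
}

const HOURS_ALWAYS_ON = 24 * 7; // 168 hours per week
const HOURS_FIXED_WINDOW = 9 * 5; // 9:00 AM to 6:00 PM, assumed weekdays only: 45 hours
const HOURS_ON_DEMAND = 20; // hypothetical actual usage per week

console.log(savingsPercent(HOURS_ALWAYS_ON, HOURS_FIXED_WINDOW)); // 73
console.log(savingsPercent(HOURS_ALWAYS_ON, HOURS_ON_DEMAND)); // 88
```

Multiplying the remaining hours by the hourly price of your instance type gives the corresponding bill.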