Recently I received a project where I needed to update the integration between an e-commerce platform and a third party that handles the delivery orders. In this article, I explain my reasoning and highlight the technical decisions I made.

Requirements

Before showing the new architecture, let’s look at the existing setup, the requirements, and the scenarios to handle:

- The legacy code is a big monolithic application already hosted on AWS

- There’s no clear pattern in the order workload. For example, a campaign can be launched at midnight and the number of orders will suddenly increase. However, workflow constraints guarantee that the throughput won’t exceed 3000 orders per second

- The high availability of third parties’ servers isn’t guaranteed

- When sending to a third party fails, a retry after a few seconds can solve the issue

- Third parties’ updates are received through a webhook

- Each third party uses a different delivery vocabulary. For example, one will call a delivered order “complete”, another “delivered”

- In case of integration issues, the developers need to be notified through Slack

- In case of logistics issues, the concerned team needs to be notified by email

- Logs need to be saved for later investigations

Event-driven Architecture

These requirements describe a typical scenario for an event-driven architecture.

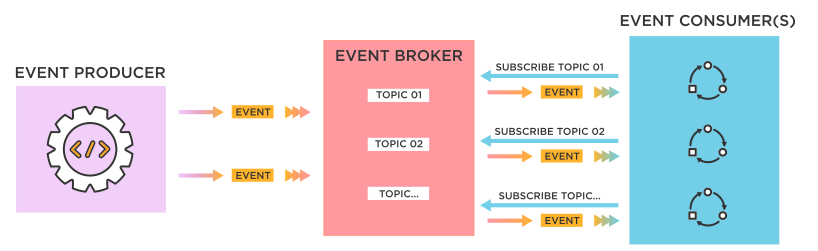

What is event-driven architecture? It is a software architecture paradigm that promotes the production, detection, and consumption of events, and the reaction to them. Put simply, one process (called the producer) generates an event, and another process (called the consumer) reacts to it. The communication between these processes is asynchronous and indirect: it passes through an event broker, which in practice is typically a queuing system.
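To make the producer, broker, and consumer roles concrete, here is a minimal in-process sketch. It is only an illustration: Python's standard `queue.Queue` stands in for the event broker, and the event shape (`order_created`, `order_id`) is an assumption, not something taken from the project.

```python
import queue
import threading

# The in-process queue stands in for the event broker (an assumption for
# illustration; in the project described here the broker is Amazon SQS).
broker = queue.Queue()
STOP = object()  # sentinel telling the consumer to shut down


def producer(order_ids):
    """Emit one event per order, without knowing who will consume it."""
    for order_id in order_ids:
        broker.put({"type": "order_created", "order_id": order_id})
    broker.put(STOP)


def consumer(handled):
    """React to events as they arrive, independently of the producer."""
    while True:
        event = broker.get()
        if event is STOP:
            break
        handled.append(event["order_id"])


def run_demo(order_ids):
    """Run one producer and one consumer that only share the broker."""
    handled = []
    worker = threading.Thread(target=consumer, args=(handled,))
    worker.start()
    producer(order_ids)
    worker.join()
    return handled
```

The producer never calls the consumer directly. Both sides only know the broker, which is what keeps the communication asynchronous and indirect.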

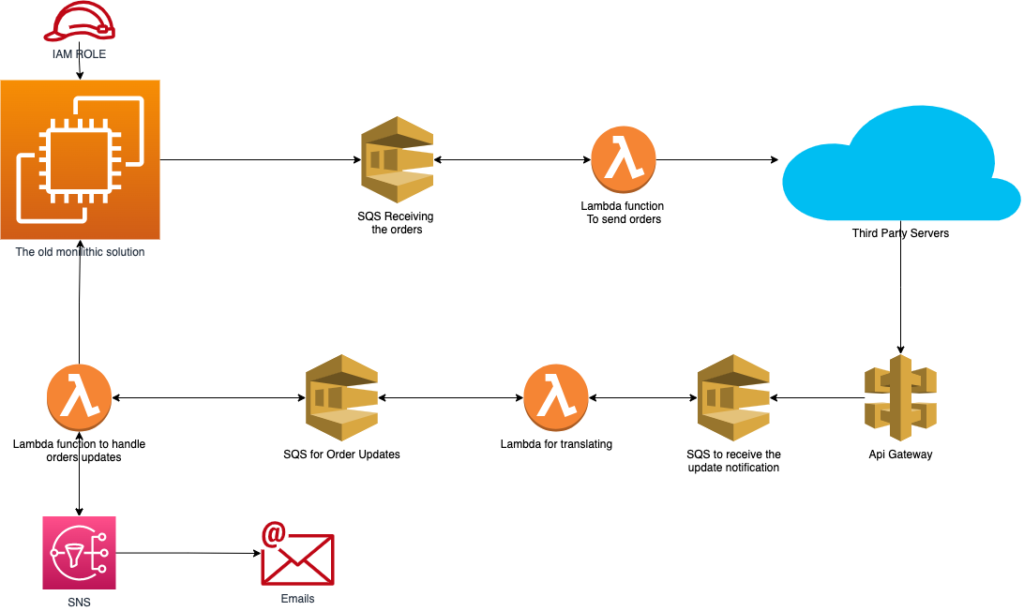

Architecture

There are many ways to implement this architecture pattern, but for this project I chose to build it with serverless AWS components. The workload won’t exceed 3000 orders per second, so Amazon SQS is more than enough as an event broker. An SQS queue can trigger an AWS Lambda function, so the Lambda function plays the role of the consumer, and anyone who pushes messages to the queue is a producer. The full architecture will look like this:

The flow will be:

- The legacy application pushes the orders into an SQS queue

- The queue triggers a Lambda function

- The Lambda function sends the orders to the third-party system

- Third parties send status updates through a webhook

- These updates are saved in an SQS queue

- A Lambda function translates each update into the proper action

- The action is placed in a different SQS queue

- Finally, a Lambda function carries out the action, either by connecting to the original system or by sending an email using SNS
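The first three steps of the flow can be sketched as a Lambda handler that consumes a batch of SQS messages. This is a sketch under assumptions, not the project's actual code: `send_to_third_party` is a hypothetical placeholder for the partner's API, orders are assumed to be JSON in the message body, and the return value uses Lambda's partial batch response format, which only takes effect when `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import json


def send_to_third_party(order):
    """Hypothetical placeholder for the HTTP call to the delivery partner.

    The partner's API is not described in this article, so the real call
    is left out on purpose.
    """
    raise NotImplementedError("replace with the partner's API call")


def handler(event, context, send=send_to_third_party):
    """Forward each order of an SQS batch to the third party.

    Messages that fail are reported back by ID, so only those return to
    the queue for a retry. Assumes ReportBatchItemFailures is enabled.
    """
    failures = []
    for record in event["Records"]:
        try:
            order = json.loads(record["body"])
            send(order)
        except Exception:
            # Any error (bad JSON, partner unavailable) marks this
            # message as failed; the rest of the batch is unaffected.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Passing the sender as a parameter keeps the handler testable without network access. Lambda itself only passes `event` and `context`, so the default sender is used in production.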

Some will suggest that the Lambda function translating the update could do the job of the third Lambda function directly. From a technical perspective, yes, it’s possible, but from a separation-of-concerns point of view, it shouldn’t. The current architecture also improves code reusability: a different integration can benefit from it by simply adding its own “vocabulary translation”.
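A “vocabulary translation” can be as small as one lookup table per third party. The sketch below is illustrative only: the partner names (`partner_a`, `partner_b`) and the internal status name `DELIVERED` are assumptions, and only the two terms mentioned in the requirements are mapped.

```python
# One table per third party, mapping its wording to an internal status.
# Partner names and the internal status name are hypothetical.
VOCABULARIES = {
    "partner_a": {"complete": "DELIVERED"},
    "partner_b": {"delivered": "DELIVERED"},
}


def translate(partner, status):
    """Map a partner-specific status to the internal vocabulary."""
    try:
        return VOCABULARIES[partner][status.strip().lower()]
    except KeyError:
        # Unknown partner or unknown term: fail loudly so the message
        # ends up being retried and investigated instead of ignored.
        raise ValueError(
            f"Unknown status {status!r} for partner {partner!r}"
        ) from None
```

With this shape, supporting a new integration means adding one more entry to `VOCABULARIES`, while the function that carries out the action stays untouched.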

I thought about adding a queue between the Lambda function and the old system, but there’s nothing to gain: the order queue already ensures the retries.

NB: the real architecture is slightly different; it contains more authentication and authorization checks. This information was removed for security purposes.

Access control, retrying and logging

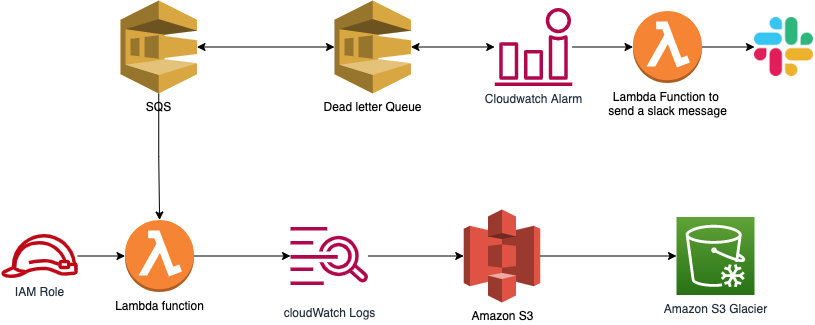

Access control is managed through IAM roles. Every Lambda function has its own role, which grants the least privilege possible: it can only read from or write to specific SQS queues.
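As a rough illustration of least privilege, the helper below builds an IAM policy document for one Lambda function. The function name and the ARNs in the usage note are hypothetical; the listed SQS actions are the ones a function typically needs to consume from one queue and, optionally, publish to another.

```python
def queue_policy(source_queue_arn, target_queue_arn=None):
    """Build a minimal IAM policy document for one Lambda function.

    The function may consume from exactly one queue and, optionally,
    send to exactly one other queue. Nothing else is allowed.
    """
    statements = [
        {
            "Effect": "Allow",
            "Action": [
                "sqs:ReceiveMessage",
                "sqs:DeleteMessage",
                "sqs:GetQueueAttributes",
            ],
            "Resource": source_queue_arn,
        }
    ]
    if target_queue_arn is not None:
        statements.append(
            {
                "Effect": "Allow",
                "Action": ["sqs:SendMessage"],
                "Resource": target_queue_arn,
            }
        )
    return {"Version": "2012-10-17", "Statement": statements}
```

For example, the translating function could receive `queue_policy(updates_queue_arn, actions_queue_arn)`, where both ARNs are placeholders for the real queues. Serializing the result with `json.dumps` gives the policy document to attach to the role.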

Every queue has a dead-letter queue (DLQ) configured with a maximum receive count. Lambda reads a message from the queue; if processing fails, the message returns to the queue to be processed again. Once this cycle has been repeated more than the maximum receive count, the message is moved to the DLQ.
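In SQS, this link between a queue and its DLQ is expressed through the queue's `RedrivePolicy` attribute. The helper below is a small sketch that builds it; the default of 5 receives is an assumption for illustration, not the value used in the project.

```python
import json


def redrive_attributes(dlq_arn, max_receive_count=5):
    """Build the SQS queue attributes that attach a dead-letter queue.

    After max_receive_count failed receives, SQS moves the message to
    the queue identified by dlq_arn. The default of 5 is illustrative.
    """
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    policy = {
        "deadLetterTargetArn": dlq_arn,
        "maxReceiveCount": str(max_receive_count),
    }
    # SQS expects the policy as a JSON string, not as a nested dict.
    return {"RedrivePolicy": json.dumps(policy)}
```

With boto3, the result could be applied through `sqs.set_queue_attributes(QueueUrl=queue_url, Attributes=redrive_attributes(dlq_arn))`, where `queue_url` and `dlq_arn` are placeholders for the real queue and DLQ.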

In normal scenarios, the DLQ should be empty. Any message in it means that an error happened. To monitor it, a CloudWatch alarm is set on the DLQ, specifically on the “ApproximateNumberOfMessagesVisible” metric. Once the alarm fires, a Lambda function is triggered and sends a notification to a specific Slack channel. After solving the issue, developers can use the DLQ redrive feature to move the messages from the DLQ back to the original queue and restart the cycle.
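The monitoring chain can be sketched in two parts: the alarm definition and the Slack notification. Several things here are assumptions: the alarm is assumed to reach the Lambda function through an SNS topic, the alarm name, period, and threshold are illustrative, and the Slack side is assumed to be an incoming webhook that accepts a JSON body with a `text` field.

```python
import json
import urllib.request


def dlq_alarm_params(queue_name, alarm_action_arn):
    """Keyword arguments for boto3's cloudwatch.put_metric_alarm.

    The alarm fires as soon as at least one message is visible in the
    DLQ. Name, period, and threshold are illustrative choices.
    """
    return {
        "AlarmName": f"{queue_name}-not-empty",
        "Namespace": "AWS/SQS",
        "MetricName": "ApproximateNumberOfMessagesVisible",
        "Dimensions": [{"Name": "QueueName", "Value": queue_name}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": 0.0,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [alarm_action_arn],
    }


def slack_payload(sns_event):
    """Turn an SNS-delivered CloudWatch alarm into a Slack message."""
    alarm = json.loads(sns_event["Records"][0]["Sns"]["Message"])
    text = (
        f"{alarm['AlarmName']} is {alarm['NewStateValue']}: "
        f"{alarm['NewStateReason']}"
    )
    return {"text": text}


def notify_slack(webhook_url, payload):
    """POST the payload to a Slack incoming webhook (URL is a secret)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

The notifying Lambda function would then be little more than `notify_slack(webhook_url, slack_payload(event))`, with `webhook_url` read from configuration rather than hard-coded.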

Lambda also generates logs, which are sent to CloudWatch Logs. They can be exported to S3 and then, using S3 lifecycle policies, transitioned to S3 Glacier for cheap long-term storage.
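The archiving step can be expressed as an S3 lifecycle configuration. The sketch below builds one; the rule ID, the key prefix, and the number of days are assumptions chosen for illustration.

```python
def glacier_lifecycle(prefix, days):
    """Build an S3 lifecycle configuration that archives exported logs.

    Objects under the given prefix move to the GLACIER storage class
    once they are `days` days old. The rule ID is an illustrative name.
    """
    if days < 0:
        raise ValueError("days must not be negative")
    return {
        "Rules": [
            {
                "ID": "archive-exported-logs",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": days, "StorageClass": "GLACIER"}
                ],
            }
        ]
    }
```

With boto3, it could be applied through `s3.put_bucket_lifecycle_configuration(Bucket=bucket, LifecycleConfiguration=glacier_lifecycle("lambda-logs/", 90))`, where the bucket name, prefix, and 90 days are placeholders.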

Scalability, High availability and Cost optimization

The solution uses serverless components, which are fully managed by AWS. AWS runs our code on highly available, fault-tolerant infrastructure, freeing us to focus on building differentiated backend services.

From a cost perspective, the solution is billed only for the resources used during execution. For example, Lambda is billed per request and for the time the code takes to execute. There’s no need to allocate servers or worry about a sudden workload increase.
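The Lambda billing model can be written as a small formula: a charge per request plus a charge per GB-second of execution. The sketch below deliberately takes both prices as parameters instead of hard-coding them, since they depend on the region and change over time, and it ignores any free tier.

```python
def lambda_cost(invocations, avg_duration_ms, memory_mb,
                price_per_request, price_per_gb_second):
    """Estimate a Lambda bill from usage figures.

    Compute usage is measured in GB-seconds: execution time multiplied
    by the memory configured for the function. Prices are inputs, not
    real AWS prices, and no free tier is applied.
    """
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return invocations * price_per_request + gb_seconds * price_per_gb_second
```

When no orders arrive, `invocations` is zero and so is the estimate, which is the main difference from paying for an always-on server.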